This story from The Wire, a private newsletter, deserves broad circulation:

Facebook’s Super Spreaders

What message is Beijing trying to send?

Last Friday afternoon, just as President Joe Biden was boarding Marine One for a weekend at Camp David, a reporter yelled out a question: “On Covid misinformation, what is your message to platforms like Facebook?” Above the din of the helicopter, Biden responded with his quintessential frankness: “They’re killing people.”

His comment didn’t come out of nowhere. Just the day before, the Surgeon General, in his first formal advisory of the Biden administration, issued a stark warning about Covid-19 related misinformation, specifically calling out social media companies for providing a platform for the dangerous inaccuracies. Ron Klain, Biden’s chief of staff, has named Facebook as a major source of anti-vaccine fear-mongering. And on the same day that Biden headed for Camp David, Jen Psaki, the White House press secretary, made a point of saying that Facebook should be doing more, especially because some of the misleading information comes from two of America’s biggest adversaries: Russia and China.

“A lot of people on these platforms, they’re not discriminating, as you all know, [about] the source of the information,” Psaki said.

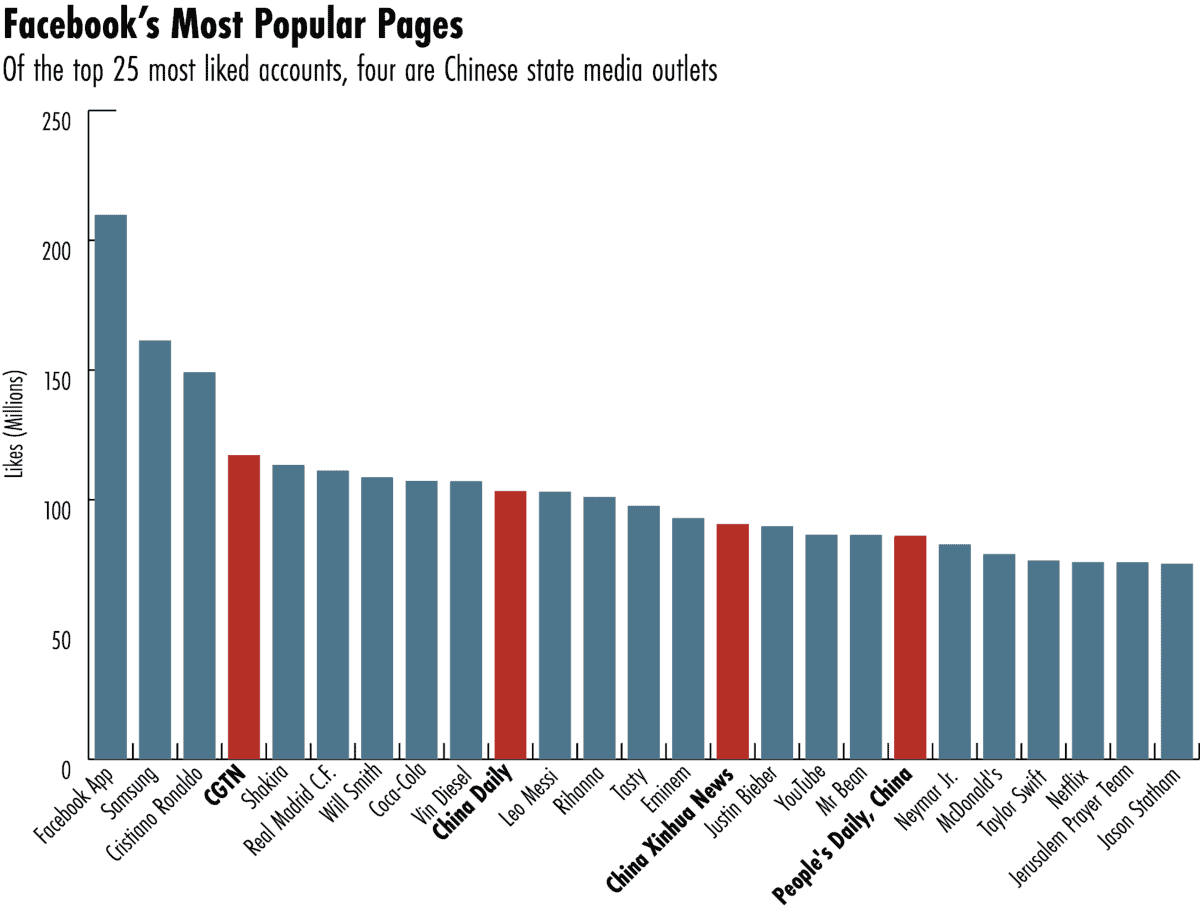

Many Facebook users, for instance, are likely unaware of the Chinese government’s huge presence on the platform. Facebook is not even allowed to operate inside China, and yet, somewhat amazingly, of the platform’s top 25 most “liked” accounts — a list that includes Shakira and Coca-Cola — four are Chinese state media outlets, according to Social Blade data. No news outlets from other countries even make the list.

The audience for these Facebook campaigns is primarily English speaking. According to a Stanford Internet Observatory report, “the seven main English-language Chinese state media Facebook pages have an average of 70.9 million followers, providing the Party apparatus considerable reach.” And because these pages often buy Facebook ads, they can “proactively target users worldwide … even if the users had never previously indicated interest in Chinese state communications.”

Plus, while it is harder to quantify the presence of inauthentic activity — and attribute it to the Chinese government — a wide range of misinformation experts told The Wire that Chinese state actors are undeniably engaged in covert campaigns on Facebook as well.

“They want to control the narrative,” says Albert Zhang, a researcher with the Australian Strategic Policy Institute (ASPI) and the author of a recent report on the Chinese government’s information campaigns on Western social media. “Having official accounts on Facebook and buying ads is one way to do that. But their accounts use covert tactics as well, such as interacting with fake accounts to boost their messaging.” In the past two years, Facebook has taken action at least four times related to coordinated campaigns originating in China.

In a comment to The Wire, a Facebook spokesperson said the company was taking steps such as labelling state media, fact checking posts, and removing inauthentic behavior from the platform.

Foreign governments aren’t the only actors driving Covid-19 related misinformation on Facebook, of course. Domestic campaigns from extremist media personalities, conspiracy theorists, and even former President Trump have spread grossly inaccurate information. But recently, observers say, Chinese campaigns have jumped on the bandwagon, and the engagement they’ve received in the process is indicative of the platform’s larger problems.

“Having these accounts puts them in the game,” says Vivien Marsh, a visiting lecturer at University of Westminster who has done extensive research comparing the news content of CGTN and BBC. “Sometimes their content is very anodyne — you don’t know whether it is Chinese or American or Russian. But sometimes it is very Chinese content. So it gives them the opportunity to slide into people’s feeds.”

This is a relatively new strategy for Beijing. For a long time, experts described China’s activities on Western social media as an attempt at “telling China’s story well,” a phrase first used by Xi Jinping in 2013 to describe overseas propaganda efforts. In practice, China’s messaging focused on painting a positive vision of China with mostly apolitical content: pandas frolicking in Chinese zoos, for example, or beautiful landscapes in rural provinces.

This approach, notes Charity Wright, a cyber threat intelligence analyst at Recorded Future, a cyber security firm, differed from Russia’s overseas propaganda, which focused on criticizing and undermining other powers. “Russia is an agent of chaos,” Wright says. “But China conducts a completely different operation. China is very interested in their own image. Externally, they want to paint China in a positive light and negate any negative news.”

Last year, as tensions heated up around Covid-19, China found itself inundated with negative news. Many people, including then-President Donald Trump, were calling Covid-19 “the China virus,” and China came under intense criticism and scrutiny for its lack of transparency in the early days of the pandemic, which allowed Covid-19 to spread in the first place. In response, experts say, China’s overseas propaganda has taken a decidedly more aggressive turn, with Beijing’s Facebook campaigns increasingly focused on Covid-19-related misinformation.

“The primary goal is setting up an alternative mechanism to shape global narratives about China,” says Marcel Schliebs, a researcher at the Oxford Internet Institute who has been tracking China’s covert and overt activities on Facebook and Twitter. “They want some way to intervene when they come under criticism.”

Facebook has proven to be one of Beijing’s most effective channels for such interventions. While Chinese campaigns are present on all Western social media platforms, including Twitter and YouTube, researchers have found that Facebook is particularly susceptible to manipulation and that state-sponsored content tends to receive more engagement on Facebook than on other platforms. As public pressure on China over Xinjiang increased, for example, Xinjiang-related tweets from Chinese state media gained less traction on Twitter. But on Facebook, the most-engaged-with posts mentioning Xinjiang still came from Chinese state media outlets, according to a recent ASPI report.

“Everyone says it is the Chinese government’s fault, but it is the platforms that allow them on,” says Maria Repnikova, assistant professor in Global Communication at Georgia State University. “And the fact that they are so popular now is definitely a shift from even a few years ago when I was talking to editors in Beijing and they were saying the U.S. was such a tough audience. This is a victory for them.”

Indeed, in 2014, Mark Zuckerberg, Facebook’s chief executive, gave a speech at Tsinghua University as part of a charm offensive to get access to the massive Chinese market. Facebook, he said in broken Chinese, could be used “to help other places in the world connect to China.” Today, it turns out, the reality is quite the opposite.

ZUCK’S U-TURN

Earlier this month, Zuckerberg went out of his way to post a patriotic video on Facebook. In front of a picturesque lake, he crouched on an electric surfboard and held a massive American flag up proudly in the wind. “Happy July 4th! 🇺🇸,” he captioned it, setting the clip to the John Denver song “Take Me Home, Country Roads.”

It didn’t take long for the internet to explode with parodies, and posts soon emerged that highlighted the contrast with another viral photo of the Facebook founder: Zuckerberg running through Tiananmen Square in 2016, with a large portrait of Mao Zedong in the background, on a smoggy Beijing day.

“What a difference 5 years can make,” tweeted Bill Birtles, an Australian Broadcasting Corporation reporter, about the juxtaposition between the two photos.

Ever since the Urumqi riots in 2009 — a period of political unrest in China’s northwest Xinjiang region that led to a digital crackdown — Facebook has been banned in the country. And ever since Facebook has been banned, Zuckerberg has devised publicity stunts to get back in. In addition to running through Tiananmen Square — which Zuckerberg captioned, “It’s great to be back in Beijing!” — Zuckerberg has publicly touted his Mandarin lessons, welcomed an official from China’s internet regulator to Facebook’s California campus, tried to create a special censorship tool for the Chinese market, and even asked Xi Jinping to name his unborn child at a White House dinner.

“Zuckerberg used more personalized tactics in Facebook’s explorations of possible China market entry, compared with the other U.S. tech giants,” says Graham Webster, editor in chief of the Stanford–New America DigiChina Project at Stanford University and a fellow at New America, a Washington, D.C.-based think tank. “Some of this was Zuckerberg, as a confident individual, believing he could cut through and accomplish what other companies couldn’t or wouldn’t.”

But now, after years of fighting that battle, Zuckerberg seems to have given up. In addition to waving the American flag for his millions of followers to see, he has started publicly criticizing China. In a speech at Georgetown University in 2019, for example, he warned against allowing Chinese values to determine American free expression, telling the assembled audience that the U.S. faces a stark choice: “Which nation’s values are going to determine what speech is going to be allowed for decades to come?”

Last summer, at an antitrust hearing, he warned lawmakers that it was “well documented that the Chinese government steals technology from American companies.” He has also lobbied aggressively against allowing TikTok — a significant rival — unfettered access to the U.S. market due to its Chinese origin.

But Zuckerberg’s public U-turn on China doesn’t tell the full story. Analysts estimate that China is Facebook’s largest source of advertising revenue outside the U.S., with spending coming from Chinese government agencies, state media, and companies attempting to reach audiences abroad. Revenue from these ad sales is estimated at $5 billion. (Facebook’s total ad sales in 2020 were $84 billion.) A Facebook spokesperson told The Wire that the company does not break down revenue by country, but denied that China is its biggest source outside the United States.

Last year, Facebook even set up a team in Singapore to focus on lucrative China ad sales, and the company announced in a Chinese statement on WeChat that “Facebook is committed to becoming the best marketing platform for Chinese companies going abroad.”

At the same time that Facebook has been courting Chinese ads, the platform has become an increasingly important venue for Chinese state media. Over the past year, according to the Oxford Internet Institute’s Schliebs, CGTN received 182 million reactions, 2.56 million comments, and 2.62 million shares. This includes 171 million likes for CGTN, which is more than RT [the Russian state outlet], BBC News, and The New York Times combined. For comparison, The New York Times only received 57 million likes on Facebook last year.

Experts say that Beijing has figured out how to game Facebook’s algorithm. For example, state media outlets and diplomats, along with what seem to be inauthentic accounts, have developed a strategy of coordinated link sharing, in which different accounts share the same text or content within minutes of each other. This, in turn, amplifies the content and makes it more visible across the platform. Facebook’s Community Standards prohibit this type of behavior, stating that users cannot post, share, or engage with content “either manually or automatically, at very high frequencies.” But experts say it is difficult to definitively prove that the content is coordinated, and even more difficult to attribute that coordination to the Chinese state.

“There is coordination there,” says Schliebs. “The pro-China actors are creating super spreader accounts.”

It was a strategy years in the making. According to David Bandurski, the co-director of the China Media Project, China realized early on in the Xi era that social media platforms in the West could be leveraged to enhance the country’s “discourse power.”

“Among China’s official think-tankers and strategizers, there was a recognition that these platforms were both powerful and readily accessible to the Chinese Party-state,” says Bandurski.

China Daily, the country’s state-run English-language newspaper, was at the forefront of the Facebook push, creating an account in 2009, soon after the government allocated significant resources for the global expansion of state media. People’s Daily and Global Times, two other state media outlets, followed suit by setting up their own accounts in 2011. Through a combination of positive human interest stories designed to drive clicks and some coordinated inauthentic behavior, the accounts started to enjoy increased engagement numbers around 2015, according to Schliebs. At first, this content was only in English, but state outlets soon added accounts in other languages, such as Spanish and Arabic, broadening their global audience.

Beijing put the new apparatus to the test during Taiwan’s 2018 local elections. Given the island’s strategic importance and proximity to mainland China, analysts say Taiwan is a regular target of China’s misinformation campaigns. In those elections, the Chinese government amplified content favoring the Nationalist Party, or Kuomintang, which has closer ties to Beijing. Taiwanese officials — and Facebook itself — were caught off guard by the coordinated effort, and the Nationalist Party, in part due to the misinformation campaigns, performed very well in the election.

Since then, China’s Facebook operation has ramped up both in Taiwan and elsewhere. A recent study by Taipei-based DoubleThink Lab, for instance, showed that the Chinese state attempted to undermine and delegitimize the 2020 elections in Taiwan with a mix of both covert and overt information campaigns. During the Hong Kong protests, Beijing also used online misinformation campaigns to portray the protesters as violent extremists.

Sarah Cook, research director for China, Hong Kong, and Taiwan at Freedom House, says that the strategy is usually subtle. “Most of the posts from the People’s Daily are click-baity content designed to get eyeballs and to soften the image of China, like old Chinese people doing cool stunts,” she says. “But once they have gotten a following, then they will throw something in that is jarring: like comparing Hong Kong protesters to ISIS.”

These campaigns, according to Schliebs, have been particularly salient in developing countries that lack a long-established media landscape or a history of negative attitudes toward China.

“Looking at it from a global perspective, we may be underestimating the effectiveness,” says Schliebs. “We need to distinguish between their goals in different geographies.”

For the past year, however, two of China’s main goals have applied worldwide: deflecting blame for Covid-19 and, more recently, undermining Western vaccines. And while everyone can agree Beijing’s Covid-19 misinformation campaigns are gaining traction, no consensus has emerged about what Facebook should do about it.

THE MILLION DOLLAR QUESTION

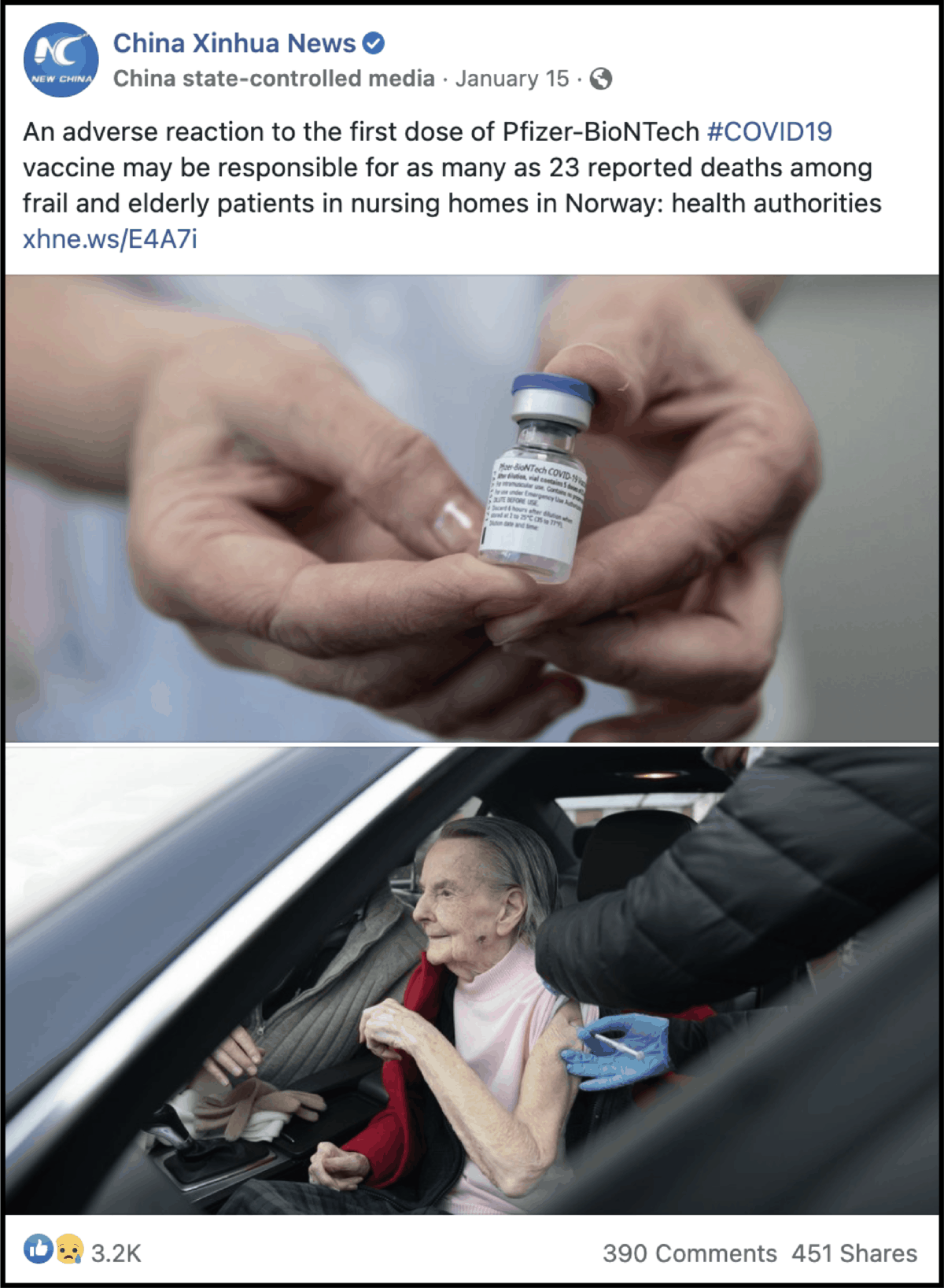

The Facebook post was simple, if evocative. A photo depicts an elderly woman, wearing a pink turtleneck, sitting in her car as a health worker sticks a needle into her wrinkled arm. The caption reads: “An adverse reaction to the first dose of Pfizer-BioNTech #COVID19 vaccine may be responsible for as many as 23 reported deaths among frail and elderly patients in nursing homes in Norway.”

Posted on the account of Xinhua News, a state-run Chinese news outlet, on January 15, it soon racked up 3,200 reactions, 390 comments, and 451 shares. One user commented, “British experts questioned that the effective rate of Pfizer and Mirderna [sic] vaccines was only 19-29%, and the number of test reports seriously violated the science rules, but why the US CDC did not respond and explain ?….Is this different from killing people? Is politics more important than human life?”

Another user replied, “The depopulation has started and we are all fools not to see through this BS. They got us where they wanted us…sheep,” quickly collecting 35 reactions and eight comments. Another user simply responded, “I don’t think I will consider Pfizer but will not hesitate to go for sinovax.”

Researchers say that Facebook is uniquely susceptible to misinformation campaigns in part because it is difficult to get information on individual users — like the ones commenting about the Xinhua article — and then, if inauthentic behavior is found, flag it for the platform to take down.

Ironically, however, this lack of transparency about users was designed to respond to another Facebook controversy. In the wake of the Cambridge Analytica scandal, in which a British consulting firm harvested personal information from millions of people without consent in order to aid the Trump campaign, Facebook strengthened its rules around third parties accessing users’ personal data. At the time, this seemed like a reasonable response to a giant problem. But, as a result, third-party researchers can no longer access data on individual users, limiting them to only exploring broad trends rather than helping to identify inauthentic accounts.

“Coming off the Cambridge Analytica scandal, they put in place very strict data protection rules,” says ASPI’s Zhang. “There is a balance, but Facebook has overcompensated.”

Now, the onus is almost entirely on Facebook to identify and take down misinformation campaigns — an effort that, analysts say, it is starting to take more seriously. In September, for example, Facebook removed a network of 150 fake accounts originating from China that were posting about American politics, the South China Sea, and Hong Kong.

The company has also pushed back aggressively against the Biden administration’s recent criticism regarding Covid-19 misinformation. “We will not be distracted by accusations which aren’t supported by the facts,” the company said in a statement responding to Biden’s comment. “The fact is that more than 2 billion people have viewed authoritative information about COVID-19 and vaccines on Facebook, which is more than any other place on the internet.”

“Facebook has made huge strides,” says Wright, the analyst at Recorded Future. But still, she says, more transparency about how the platform is handling misinformation campaigns is needed. “We have to know what is being detected, what is being taken down and why.”

Twitter, for instance, generally releases specific information about the accounts and the activities that resulted in the company taking them down — a system researchers say Facebook should model.

“If Twitter can do it, Facebook can do it,” Puma Shen, the director of DoubleThink Lab, which studies misinformation in Taiwan, says. “They can delete crucial info to protect individual privacy and then release it.”

The Surgeon General’s recent report on Covid misinformation also urges social media companies to be more transparent, stating that “researchers need data on what people see and hear, not just what they engage with, and what content is moderated (e.g., labeled, removed, downranked), including data on automated accounts that spread misinformation.”

In order to tackle the overt side of China’s misinformation campaigns, Facebook, along with other platforms like Twitter and YouTube, started labelling posts from state media last year. Now Facebook posts from Russian, Iranian, and Chinese outlets contain a tag that contextualizes the post — “China state-controlled media.”

While there has been some criticism about the consistency of the labelling guidelines, and where to draw the line of what is or is not state controlled, many analysts agree it has been an effective tool. A China Media Project study about Twitter’s labelling policy, for example, showed that Xinhua, CGTN and People’s Daily received 20 percent fewer likes and retweets after the policy was introduced. Facebook is also now fact checking claims from state-owned media outlets. If a post is found to be misleading, Facebook reduces its distribution and adds an article redirecting users to accurate information.

“They are doing more than they were — they are at least labelling the pages,” says Cook, of Freedom House. “And adding those taglines about the outlets’ Chinese government affiliation is important.”

But besides labelling state outlets and attempting to take down inauthentic behavior, what else could Facebook be doing to slow the spread of covert and overt Chinese state-sponsored misinformation?

The most extreme action would be to deplatform Chinese state media accounts. But most experts who The Wire spoke with agree that this would result in more backlash than progress.

“Do we want to get to a place where we are moderating content based on the country of origin?” asks Gabrielle Lim, a researcher with the Technology and Social Change Project at Harvard Kennedy School’s Shorenstein Center and an associate at the Citizen Lab. “Should we be putting up digital borders? That’s, ironically, what China and Russia want.”

Many analysts say the solution is less about Facebook’s moderation policies and more about the company’s revenue model. As long as Facebook relies on ad revenue, including billions of dollars from China and Chinese state media organizations, it will be hard for the company to crack down on misinformation campaigns that are aided by those same media outlets.

The fact that China represents a large source of ad revenue for Facebook, even though the company cannot operate there, must factor into its decision making, says Aynne Kokas, an associate professor of media studies at the University of Virginia who studies Chinese media. “That definitely does dissuade them from taking [Chinese state-sponsored misinformation] down,” she says. “This is a problem of Facebook’s business model.”

What Facebook does next, especially now that the Biden administration has put the pressure on, is “the million dollar question,” says Lim, of the Shorenstein Center. But while misinformation on Facebook is certainly a problem, Lim warns that focusing too much on the China element may be a distraction.

“As researchers, we are very wary about the numbers, like the state media’s followers,” she says. “Are these artificially inflated? Only Facebook knows.”

Katrina Northrop is a journalist based in New York. Her work has been published in The New York Times, The Atlantic, The Providence Journal, and SupChina. @NorthropKatrina